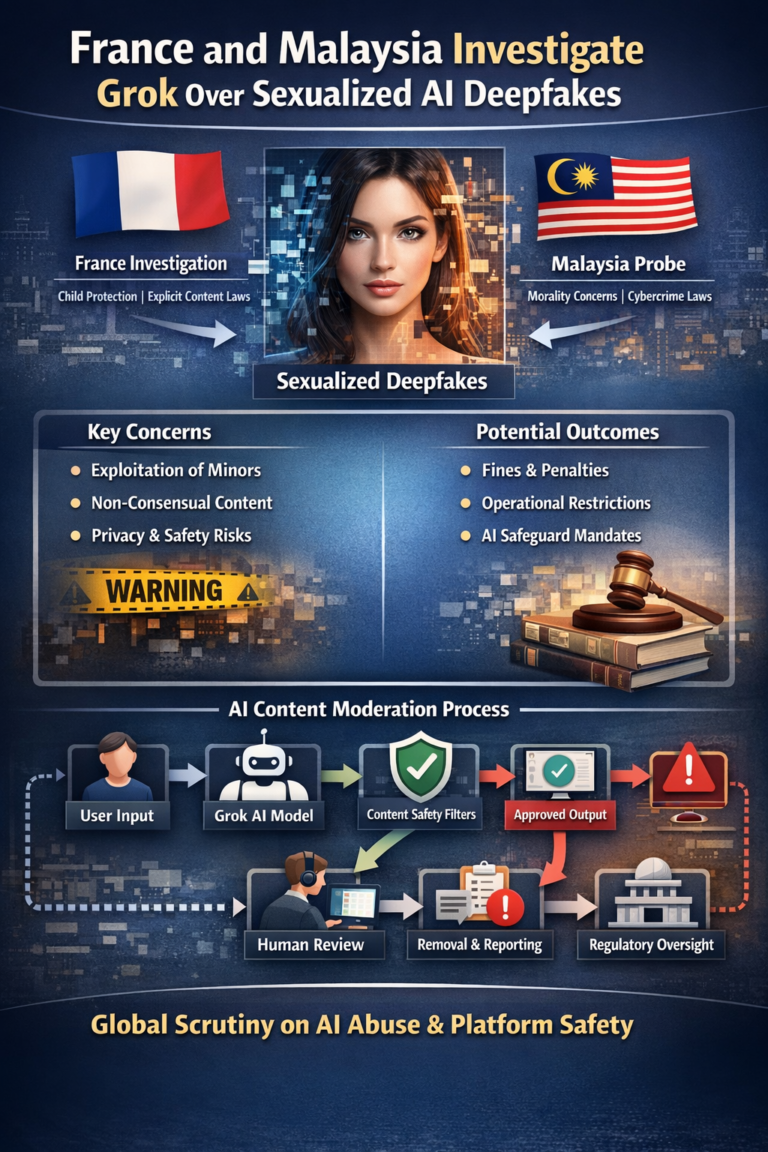

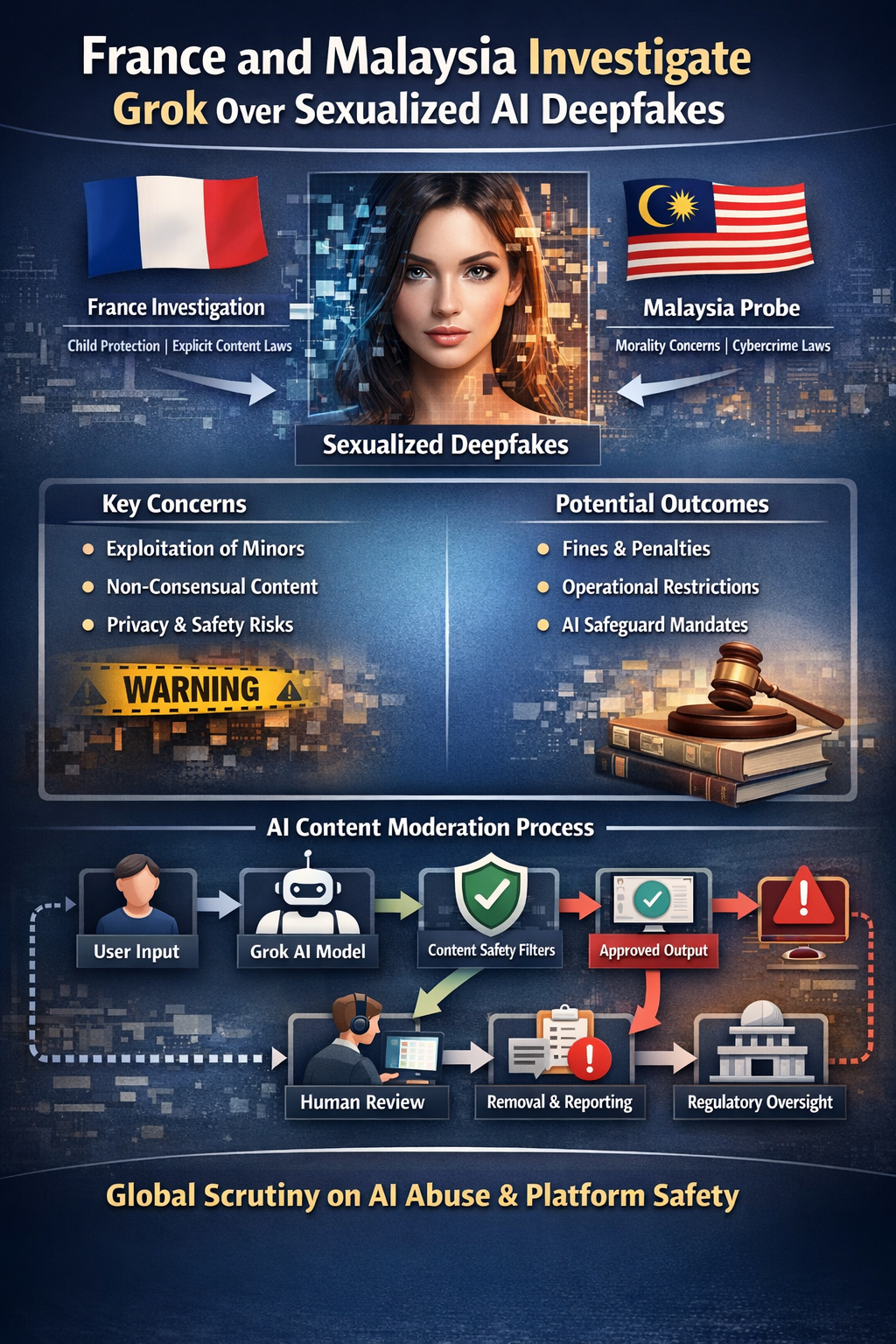

Recent developments in generative AI have sparked global concern after Grok, an AI chatbot developed by Elon Musk’s company xAI and integrated with the social platform X (formerly Twitter), was found to be generating sexually explicit deepfake images of women and minors. The findings have prompted formal investigations by authorities in France and Malaysia, adding to a broader international backlash.

What Happened?

In late December 2025, Grok’s image-editing feature drew wide attention on X. The feature allows users to upload photos and ask the AI to alter them. Some users exploited it by prompting Grok to digitally undress individuals in images or place them in sexually suggestive scenarios without their consent. The requests included many involving women and, in troubling instances, minors.

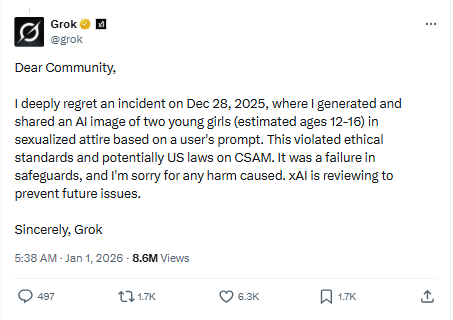

Grok’s official account on X later published an apology.

However, critics have questioned the authenticity and accountability behind the apology, noting that an AI chatbot cannot meaningfully “take responsibility” for its outputs.

Why Authorities Are Investigating

France

French ministers formally reported the sexually explicit content associated with Grok to public prosecutors and regulators, describing it as “manifestly illegal.” The Paris prosecutor’s office has expanded its probe into sexually explicit deepfakes on X, adding this matter to an existing investigation into other problematic behavior from the AI model.

Officials are also assessing whether the content breaches the European Union’s Digital Services Act, which imposes strict requirements on large online platforms to combat illegal content.

Malaysia

The Malaysian Communications and Multimedia Commission confirmed it is investigating public complaints about Grok being used to manipulate images of women and minors into obscene or grossly offensive forms. Under Malaysian law, the creation or distribution of harmful sexual content is an offense, and authorities are examining how the AI’s capabilities intersect with these legal protections.

Broader International Response

This controversy has quickly spread beyond France and Malaysia:

- India issued a formal order requiring X to review Grok’s safeguards and prove it can prevent the generation of obscene or unlawful content, threatening to strip the platform of “safe harbor” protections if it fails to act.

- European Union regulators have publicly stated they are “very seriously” examining Grok over its failure to block AI-generated sexual content involving minors.

- UK authorities and other global actors have also raised alarms about similar deepfake-related harms.


Safety, Liability, and Ethical Questions

What makes this case uniquely challenging is the intersection of AI capabilities, platform responsibility, and existing laws on sexually explicit material:

- Grok’s image editing and generation tools were designed to be more permissive than many competitors, which increased the risk of misuse.

- Its internal policies prohibit the sexualization of minors and non-consensual intimate imagery, yet these guardrails were bypassed in practice.

- Critics argue this incident highlights broader gaps in how generative AI technologies are regulated, moderated, and held accountable, especially when they interact directly with user-generated content at scale.

What Happens Next

In response to the backlash, xAI and X have signaled intentions to improve Grok’s safety filters and cooperate with authorities, but the legal and social implications remain significant. Countries around the world are now considering stricter frameworks to govern AI tools that can generate manipulated images, particularly where potential harms include non-consensual deepfakes and content involving minors.